Writing N-Tier apps can get complicated fast. Consider the assortment of n-tier technologies now consigned to the ash heap of history: WCF RIA Services, Self-Tracking Entities, and good old typed DataSets. These have all suffered from a lack of interoperability and from tight coupling to technologies that were later deprecated. DataSets were coupled to the .NET Framework, RIA Services was tightly integrated with Silverlight, and Self-Tracking Entities bit the dust with EF5 when CodeFirst was released and the team didn’t want to upgrade a solution that was somewhat flawed to begin with.

The problem all these approaches attempted to solve is how to commit a batch of entity updates in a single transaction with a single round-trip to the server. For example, if I have an order with associated details, and I alter the order details by modifying one of the details, removing one, and inserting a new detail, I would like to send the order to a service operation where all the updates can take place atomically. One approach would be to separate out each set of changes and add them as parameters. For example: UpdateOrder(Order order, OrderDetail[] addedDetails, OrderDetail[] modifiedDetails, OrderDetail[] deletedDetails). But that would require me to massage the order by removing all the details and creating different collections of details based on the types of changes, and I would have to cache deleted entities so they could be passed in separately. That involves a fair amount of tedious work, and the service API becomes rather clunky. It would be preferable for my service to expose an UpdateOrder operation that simply accepts an Order with details that have been added, modified or deleted.

```csharp
public Order UpdateOrder(Order order)
{
    // Add, update and delete order details ...
}
```

The trick is to pass entity state along, but to do so in a way that is technology- and platform-agnostic and that does not add much overhead to the operation. There is a technology that achieves this goal: OData, implemented by WCF Data Services and OData for ASP.NET Web API. But the intent of OData and Data Services is to expose a data model (EF or an alternative source) as a REST-based service, and it is geared toward rendering data as a syndication feed. While this is certainly a plausible choice, it might be overkill for scenarios where you simply want to expose a set of fine-grained operations.

A simpler and more straightforward approach would be to attach a little bit of metadata to each entity to indicate its state. The server can then read that state and perform all the updates in a single transaction. That is the purpose of my new library and Visual Studio 2012 extension: Trackable Entities.

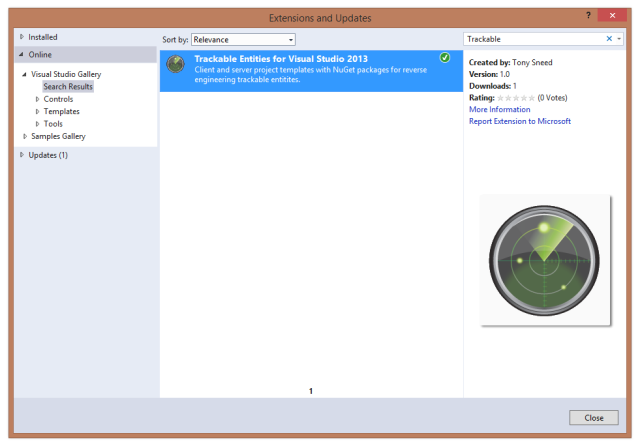

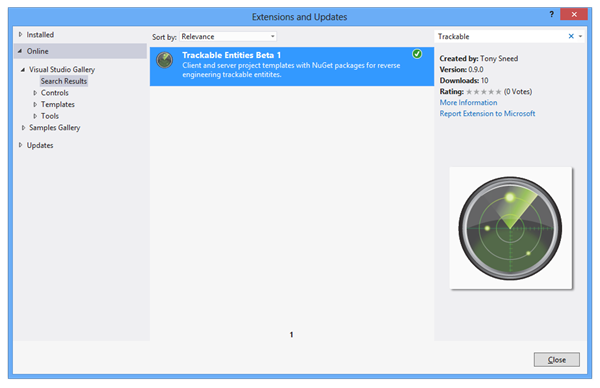

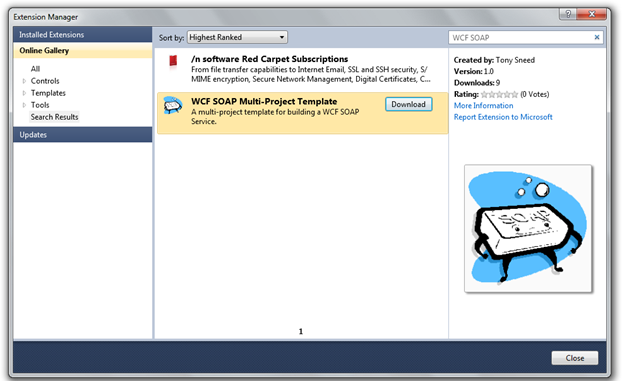

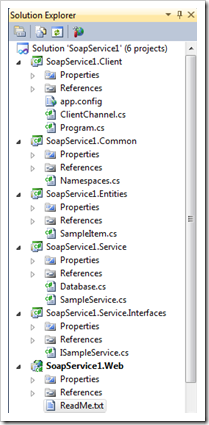

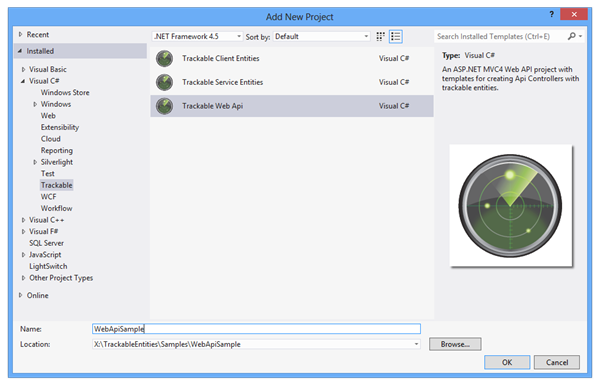

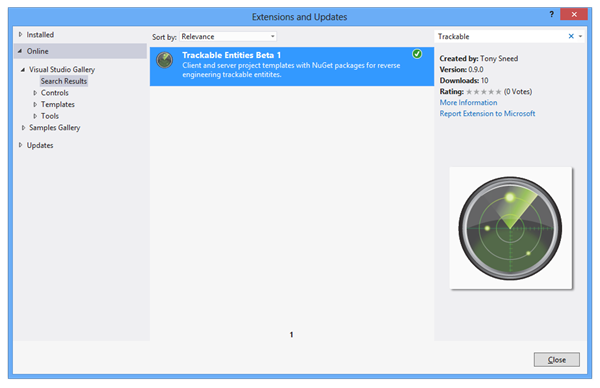

The easiest way to get it is to install it from within Visual Studio: Tools, Extensions and Updates, Online Visual Studio Gallery, then search for “Trackable”. But you can get more goodness by visiting the Trackable Entities CodePlex site where you can download samples and source code. (Note that a prerequisite for the VS extension is the Entity Framework Power Tools.)

Core functionality is contained in a set of TrackableEntities NuGet packages, which provide both client-side change-tracking and a server-side DbContext extension that walks an object graph and informs the DbContext of each entity’s state, so it can be persisted in a transaction when SaveChanges is called. The client NuGet package has a ChangeTrackingCollection<T>, which extends ObservableCollection<T> by monitoring entity changes and marking entities as Added, Modified or Deleted. A GetChanges method returns a cloned object graph containing only changed entities. The client-side package is implemented as a Portable Class Library that supports .NET 4.5, Silverlight 4 and 5, Windows Phone 7.5 or greater, and Windows Store applications.
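To make the client-side behavior more concrete, here is a minimal sketch of a collection that derives from ObservableCollection<T> and records inserts, removals and property edits, using the ITrackable interface and TrackingState enum described next (reproduced so the block compiles on its own). It is not the library's ChangeTrackingCollection<T>: the class SimpleTrackingCollection<T>, the DetailSketch entity and the cached list of deleted items are illustrative assumptions, and this GetChanges returns a flat list of the original instances rather than a cloned object graph.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.ComponentModel;
using System.Linq;

// Reproduced from the article so the sketch compiles on its own.
public enum TrackingState { Unchanged, Added, Modified, Deleted }

public interface ITrackable
{
    TrackingState TrackingState { get; set; }
    ICollection<string> ModifiedProperties { get; set; }
}

// Hypothetical, simplified tracker; not the ChangeTrackingCollection<T> source.
// Clear() and indexer replacement (SetItem) are not handled here.
public class SimpleTrackingCollection<T> : ObservableCollection<T>
    where T : class, ITrackable, INotifyPropertyChanged
{
    // Removed items are cached so their deletion can still be sent to the server.
    private readonly List<T> _deleted = new List<T>();

    // The base constructor copies the initial items without raising events,
    // so they stay Unchanged; we only start listening for later edits.
    public SimpleTrackingCollection(IEnumerable<T> items) : base(items)
    {
        foreach (T item in this) item.PropertyChanged += OnItemPropertyChanged;
    }

    protected override void InsertItem(int index, T item)
    {
        item.TrackingState = TrackingState.Added;
        item.PropertyChanged += OnItemPropertyChanged;
        base.InsertItem(index, item);
    }

    protected override void RemoveItem(int index)
    {
        T item = this[index];
        item.PropertyChanged -= OnItemPropertyChanged;
        // An item added and then removed never reached the server, so forget it.
        if (item.TrackingState != TrackingState.Added)
        {
            item.TrackingState = TrackingState.Deleted;
            _deleted.Add(item);
        }
        base.RemoveItem(index);
    }

    private void OnItemPropertyChanged(object sender, PropertyChangedEventArgs e)
    {
        var item = (T)sender;
        // Ignore changes to the tracking state itself and edits to items that will be inserted whole.
        if (e.PropertyName == nameof(ITrackable.TrackingState) || item.TrackingState == TrackingState.Added)
            return;
        item.TrackingState = TrackingState.Modified;
        if (item.ModifiedProperties == null) item.ModifiedProperties = new List<string>();
        if (!item.ModifiedProperties.Contains(e.PropertyName)) item.ModifiedProperties.Add(e.PropertyName);
    }

    // Returns changed and deleted items (original instances, not clones).
    public List<T> GetChanges()
    {
        return this.Where(i => i.TrackingState != TrackingState.Unchanged)
                   .Concat(_deleted)
                   .ToList();
    }
}

// A hypothetical entity used to exercise the collection.
public class DetailSketch : ITrackable, INotifyPropertyChanged
{
    private int _quantity;
    public int Id { get; set; }
    public int Quantity
    {
        get { return _quantity; }
        set
        {
            if (value == _quantity) return;
            _quantity = value;
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(nameof(Quantity)));
        }
    }
    public TrackingState TrackingState { get; set; }
    public ICollection<string> ModifiedProperties { get; set; }
    public event PropertyChangedEventHandler PropertyChanged;
}
```

Caching removed items is what lets deletions travel in the same graph as inserts and updates, which is the bookkeeping the multi-parameter UpdateOrder signature would otherwise push onto the caller.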

Entity state is tracked by means of the ITrackable interface:

```csharp
public interface ITrackable
{
    TrackingState TrackingState { get; set; }
    ICollection<string> ModifiedProperties { get; set; }
}
```

TrackingState is simply an enum, and ModifiedProperties contains a list of properties that have been updated on an entity, so that only changed properties can be persisted.

```csharp
public enum TrackingState
{
    Unchanged,
    Added,
    Modified,
    Deleted
}
```
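ModifiedProperties matters because it lets the server write only the columns that actually changed. The sketch below shows the idea with a hypothetical BuildUpdateSql helper that turns a table name, key column and list of modified properties into a parameterized SQL UPDATE; the helper, the table and column names, and the @-parameter convention are assumptions for illustration. With Entity Framework the same effect is usually achieved by marking individual properties as modified on the entry (for example, context.Entry(order).Property("CustomerId").IsModified = true) rather than the whole entity, and the library's own approach may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PartialUpdateSketch
{
    // Hypothetical helper: builds a parameterized UPDATE that assigns only the
    // columns listed in ModifiedProperties. Names are not quoted or validated,
    // so they must come from entity metadata, never from user input.
    public static string BuildUpdateSql(string table, string keyColumn, ICollection<string> modifiedProperties)
    {
        if (modifiedProperties == null) throw new ArgumentNullException(nameof(modifiedProperties));

        // The key identifies the row and is never assigned.
        List<string> assignments = modifiedProperties
            .Where(p => p != keyColumn)
            .Select(p => p + " = @" + p)
            .ToList();

        if (assignments.Count == 0)
            throw new ArgumentException("No non-key properties were modified.", nameof(modifiedProperties));

        return "UPDATE " + table + " SET " + string.Join(", ", assignments)
             + " WHERE " + keyColumn + " = @" + keyColumn;
    }
}
```

For an order where only CustomerId and ShipCity were edited, this produces UPDATE Orders SET CustomerId = @CustomerId, ShipCity = @ShipCity WHERE OrderId = @OrderId, leaving every other column untouched.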

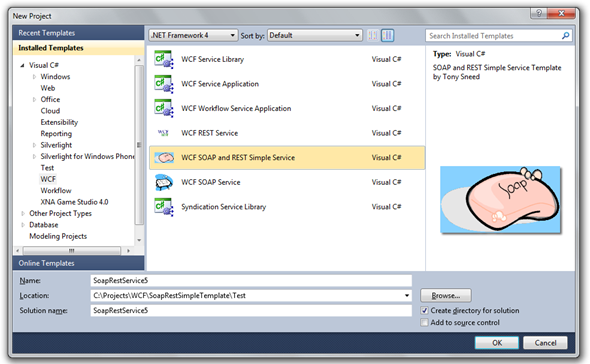

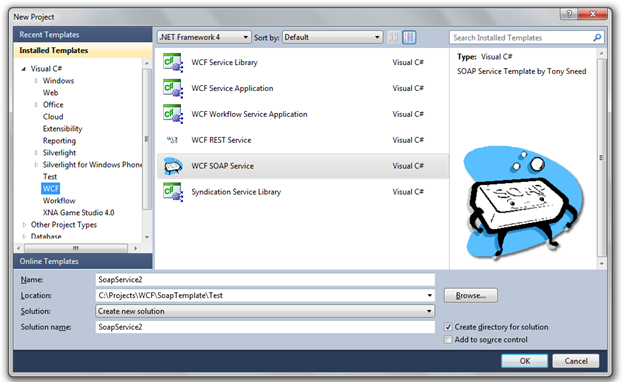

While the NuGet packages provide change-tracking and persistence capability, the sweet spot for productively using these libraries lies in the Trackable Entities Visual Studio 2012 extension, deployed as a VSIX file that installs a number of Visual Studio project templates. First there is a Client Entities template, which creates a portable library and includes a T4 template for reverse engineering client entities using Entity Framework Power Tools. Keep in mind that on the client you will remove all EF-specific classes and packages after running the tool, and there will be no coupling to EF on the client, or any other persistence technology for that matter. (I have submitted a feature request to the EF team for allowing the EF Power Tools to generate entities separately from EF-specific classes, so they can be placed in an assembly that does not reference EF.) Each generated entity implements both ITrackable and INotifyPropertyChanged interfaces to facilitate change-tracking, and child collections are typed as ChangeTrackingCollection<T>, so that they are properly change-tracked. For example, here is the Order entity reverse engineered from the Northwind database.

```csharp
using System.Runtime.Serialization; // DataContract, DataMember
using Newtonsoft.Json;              // JsonObject
using TrackableEntities.Client;     // ChangeTrackingCollection<T> (namespace assumed)

[JsonObject(IsReference = true)]
[DataContract(IsReference = true, Namespace = "http://schemas.datacontract.org/2004/07/TrackableEntities.Models")]
public partial class Order : ModelBase<Order>
{
    [DataMember]
    public int OrderId
    {
        get { return _OrderId; }
        set
        {
            if (value == _OrderId) return;
            _OrderId = value;
            NotifyPropertyChanged(m => m.OrderId);
        }
    }
    private int _OrderId;

    [DataMember]
    public string CustomerId
    {
        get { return _CustomerId; }
        set
        {
            if (value == _CustomerId) return;
            _CustomerId = value;
            NotifyPropertyChanged(m => m.CustomerId);
        }
    }
    private string _CustomerId;

    [DataMember]
    public Customer Customer
    {
        get { return _Customer; }
        set
        {
            if (value == _Customer) return;
            _Customer = value;
            NotifyPropertyChanged(m => m.Customer);
        }
    }
    private Customer _Customer;

    // Child collection typed as ChangeTrackingCollection<T> so details are change-tracked
    [DataMember]
    public ChangeTrackingCollection<OrderDetail> OrderDetails
    {
        get { return _OrderDetails; }
        set
        {
            if (Equals(value, _OrderDetails)) return;
            _OrderDetails = value;
            NotifyPropertyChanged(m => m.OrderDetails);
        }
    }
    private ChangeTrackingCollection<OrderDetail> _OrderDetails;
}
```

ITrackable and INotifyPropertyChanged are implemented in ModelBase<T>, which has a NotifyPropertyChanged method that accepts a lambda expression. You might also notice the [DataContract] and [JsonObject] attributes, which support serialization of cyclical references when their IsReference property is set to true.
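The generated ModelBase<T> is not shown here, so the following is only a sketch of how a base class can accept a lambda such as m => m.OrderId and turn it into a property name for INotifyPropertyChanged. ModelBaseSketch<T> and ProductSketch are hypothetical names, and the real generated class may be implemented differently.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Linq.Expressions;

public enum TrackingState { Unchanged, Added, Modified, Deleted }

public interface ITrackable
{
    TrackingState TrackingState { get; set; }
    ICollection<string> ModifiedProperties { get; set; }
}

// Hypothetical base class; the generated ModelBase<T> may differ.
public abstract class ModelBaseSketch<T> : ITrackable, INotifyPropertyChanged
    where T : ModelBaseSketch<T>
{
    public TrackingState TrackingState { get; set; }
    public ICollection<string> ModifiedProperties { get; set; }
    public event PropertyChangedEventHandler PropertyChanged;

    // Accepts m => m.SomeProperty and raises PropertyChanged with "SomeProperty".
    protected void NotifyPropertyChanged<TResult>(Expression<Func<T, TResult>> property)
    {
        var member = property.Body as MemberExpression;
        if (member == null)
            throw new ArgumentException("Expected a property access such as m => m.Name.", nameof(property));
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(member.Member.Name));
    }
}

// A hypothetical entity written in the same style as the generated Order class.
public class ProductSketch : ModelBaseSketch<ProductSketch>
{
    private string _name;
    public string Name
    {
        get { return _name; }
        set
        {
            if (value == _name) return;
            _name = value;
            NotifyPropertyChanged(m => m.Name);
        }
    }
}
```

Making the method generic in the property type keeps value-type properties such as OrderId from being wrapped in a conversion node, so the lambda body is always a plain MemberExpression. The lambda is checked by the compiler and follows renames, at the cost of building an expression tree on each setter call; on .NET 4.5 the [CallerMemberName] attribute is a lighter-weight alternative.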

On the server side you can use the Service Entities project template to create a .NET 4.5 class library. It also has a set of T4 templates for use with the EF Power Tools that reverse engineer CodeFirst classes and entities that implement ITrackable. It comes with an ApplyChanges extension method for DbContext, which walks an entity object graph, reads the tracking state and sets the entity state. It can be tricky to apply state changes in the right order as you recursively traverse an object graph, but the extension method takes care of that for you. All you do is call ApplyChanges just before SaveChanges, and you’re good to go.

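```csharp
// order is the change-tracked graph received from the client
using (var db = new NorthwindContext())
{
    db.ApplyChanges(order); // set each entity's state from its TrackingState
    db.SaveChanges();       // persist all changes in a single transaction
}
```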
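The ordering problem can be illustrated without Entity Framework. The sketch below walks a parent and its children recursively and lists the order in which a persistence layer could process their states: an added or modified parent comes before its children, so newly inserted children have a row to reference, while a deleted parent comes after its children, so it is never removed while dependents still point at it. GraphNode, ChangePlanner and the string output are hypothetical; this is one plausible ordering, not the ApplyChanges algorithm, and it ignores reference properties such as Order.Customer, many-to-many relationships and cycles.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum TrackingState { Unchanged, Added, Modified, Deleted }

// Hypothetical minimal graph: a node with a list of child nodes.
public class GraphNode
{
    public string Name { get; set; }
    public TrackingState State { get; set; }
    public List<GraphNode> Children { get; } = new List<GraphNode>();
}

public static class ChangePlanner
{
    // Returns "State Name" steps in an order that respects parent-child dependencies.
    public static List<string> Plan(GraphNode root)
    {
        var steps = new List<string>();
        Visit(root, steps);
        return steps;
    }

    private static void Visit(GraphNode node, List<string> steps)
    {
        if (node.State == TrackingState.Deleted)
        {
            // Post-order: children first, so the parent is never deleted while children reference it.
            // Assumes the client has already marked the children of a deleted parent as Deleted.
            foreach (var child in node.Children) Visit(child, steps);
            steps.Add("Deleted " + node.Name);
        }
        else
        {
            // Pre-order: the parent first, so added children can reference its key.
            if (node.State != TrackingState.Unchanged)
                steps.Add(node.State + " " + node.Name);
            foreach (var child in node.Children) Visit(child, steps);
        }
    }
}
```

The only difference between the two branches is whether a node is emitted before or after its children, which is enough to keep every dependent row consistent with its parent in this simple tree.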

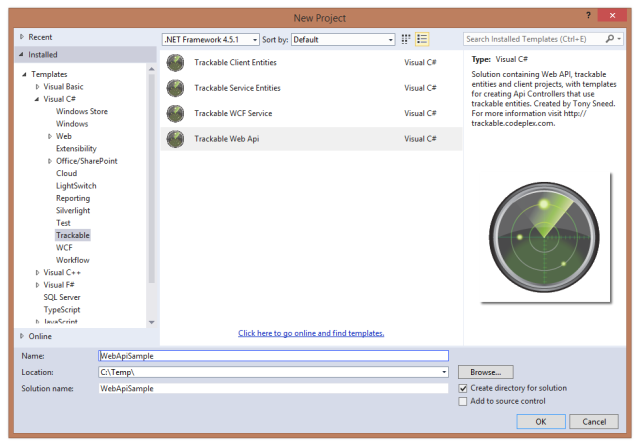

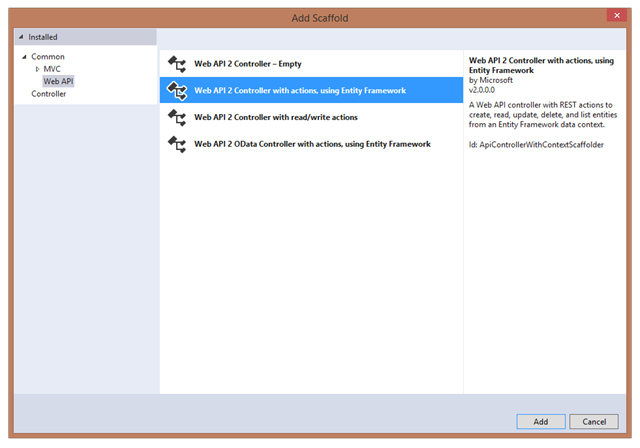

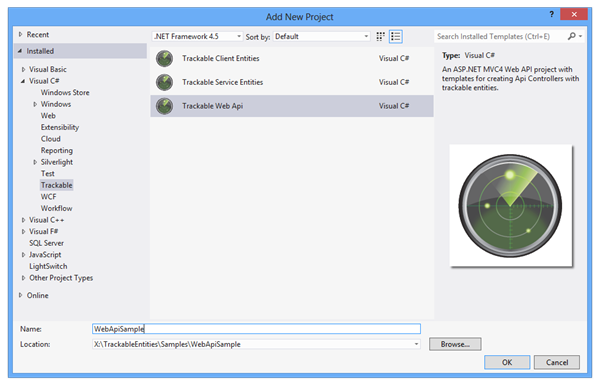

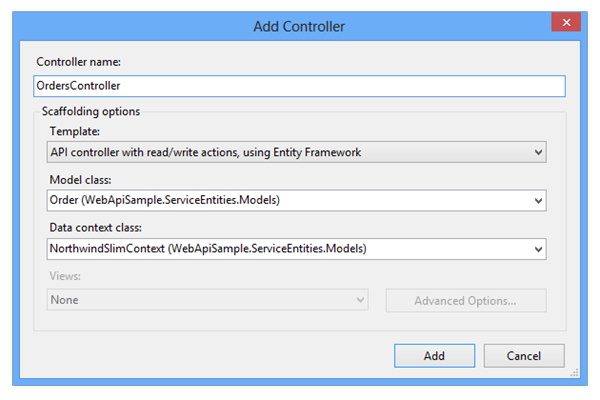

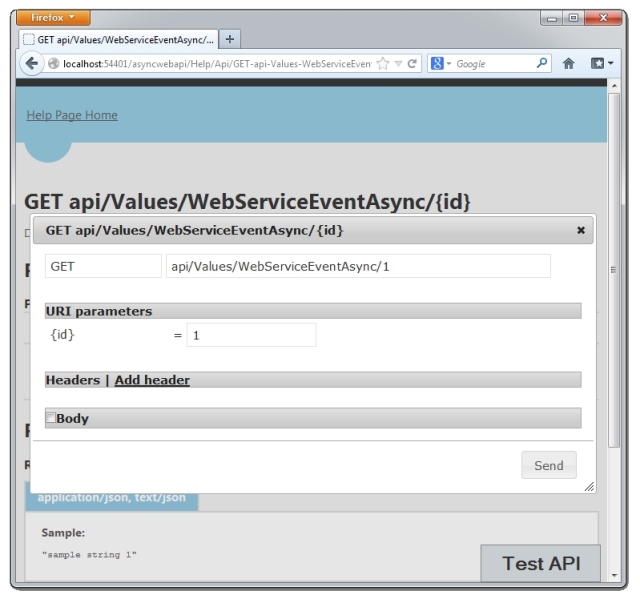

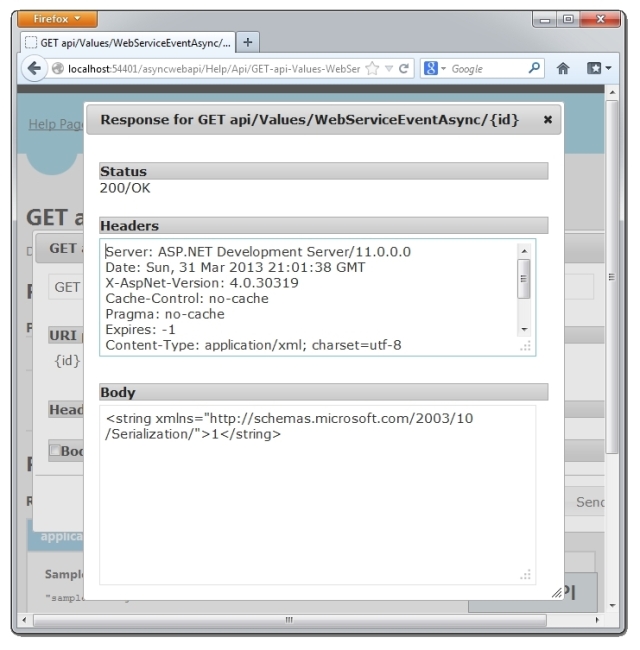

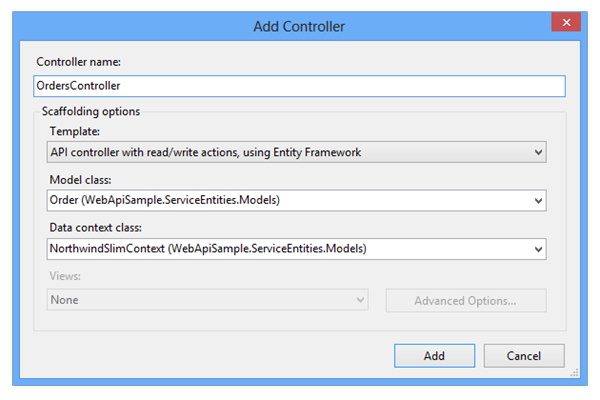

The Client and Service Entities project templates give you an amazing amount of functionality, but there’s more! The Trackable Entities extension also installs a Trackable Web API multi-project template, which includes both the Client and Service Entities projects, as well as an ASP.NET Web API project with a T4 template that customizes code generation when you add a controller. The call to ApplyChanges is inserted in the right place, and there are other improvements to the default template. For example, the Put method returns the updated entity so that concurrency checks will take place, Post loads related entities, and Delete loads child entities.

The Web API template also includes a console client project that references the Client Entities project and uses HttpClient to invoke service operations on change-tracked entities. It includes a ReadMe file with step-by-step instructions to get you started. Here are some helper methods produced for the console client, which can be ported to any kind of .NET client (for example, WPF, Phone, or Windows Store app).

```csharp
// ReadAsAsync, PostAsJsonAsync and PutAsJsonAsync are extension methods in the
// System.Net.Http namespace, supplied by the Microsoft.AspNet.WebApi.Client package.
// Blocking on .Result is acceptable in a console app; in a UI client,
// prefer async/await so the UI thread is not blocked.

// TODO: Replace 'Entities', 'Entity', 'EntityId', 'entity' with class name (for ex, Order)

private static Entity GetEntity(HttpClient client, int entityId)
{
    string request = "api/Entities/" + entityId;
    var response = client.GetAsync(request).Result;
    response.EnsureSuccessStatusCode();
    var result = response.Content.ReadAsAsync<Entity>().Result;
    return result;
}

private static Entity CreateEntity(HttpClient client, Entity entity)
{
    string request = "api/Entities";
    var response = client.PostAsJsonAsync(request, entity).Result;
    response.EnsureSuccessStatusCode();
    var result = response.Content.ReadAsAsync<Entity>().Result;
    return result;
}

private static Entity UpdateEntity(HttpClient client, Entity entity)
{
    string request = "api/Entities";
    var response = client.PutAsJsonAsync(request, entity).Result;
    response.EnsureSuccessStatusCode();
    var result = response.Content.ReadAsAsync<Entity>().Result;
    return result;
}

private static void DeleteEntity(HttpClient client, Entity entity)
{
    string request = "api/Entities/" + entity.EntityId;
    var response = client.DeleteAsync(request).Result;
    response.EnsureSuccessStatusCode();
}
```

I began thinking about trackable entities way back in 2008, when I published an MSDN Magazine article exploring the topic, and then again in 2010 when I updated the code and incorporated T4 templates which read WCF service metadata. These ideas are carried forward with this project, but it is not tied to WCF and allows for any service or persistence framework (it could, for example, be extended for NHibernate and other object-relational mappers). The main design goal is to provide a robust client-side change tracker and code-generation of persistence-ignorant classes (POCO) that carry state information with minimal overhead and are fully interoperable. Service entities are generated independently so they can be mapped to a data context, and they can implement ITrackable without referencing the client library.

The code is fairly stable and there is good code coverage with unit and integration tests, but I’ve released the NuGet packages and VS extension as beta (you need to include Prerelease packages to find them) so that you can start playing with it, and I can respond to feature requests before going RTM. (Note that the EF Power Tools are also in beta but will be folded into EF6 when it is released.) So please let me know what you think and I’ll do my best to address any issues that come up. Enjoy!